Final: Anime/Manga-Style Filter with PoseNet and Style Transfer

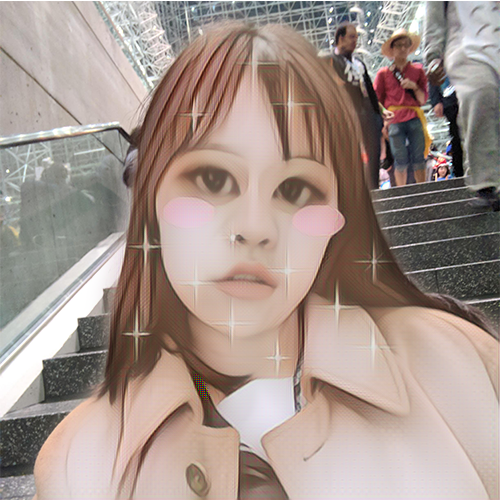

I made a selfie filter that adjusts a photo in the browser using style transfer and poseNet models with ml5. The filter mimics the art style of anime and manga (Japanese animation and comics), transforming a photo of the user into a character in a comic. Similar to filter apps like Meitu, the webpage will take in a user’s photo, process it, and then allow them to download it.

Style Transfer

First, I tested different style transfer models to find one that would best give an ‘anime style’ to a photo. I wasn’t sure how different styles of anime drawings would translate to style transfer models, so I tried different types of ‘anime style’ depictions. I think the first one gave the best result, although I like the 4th one too. I used this method to train a style transfer model remotely with Spell and convert it for use with ml5.

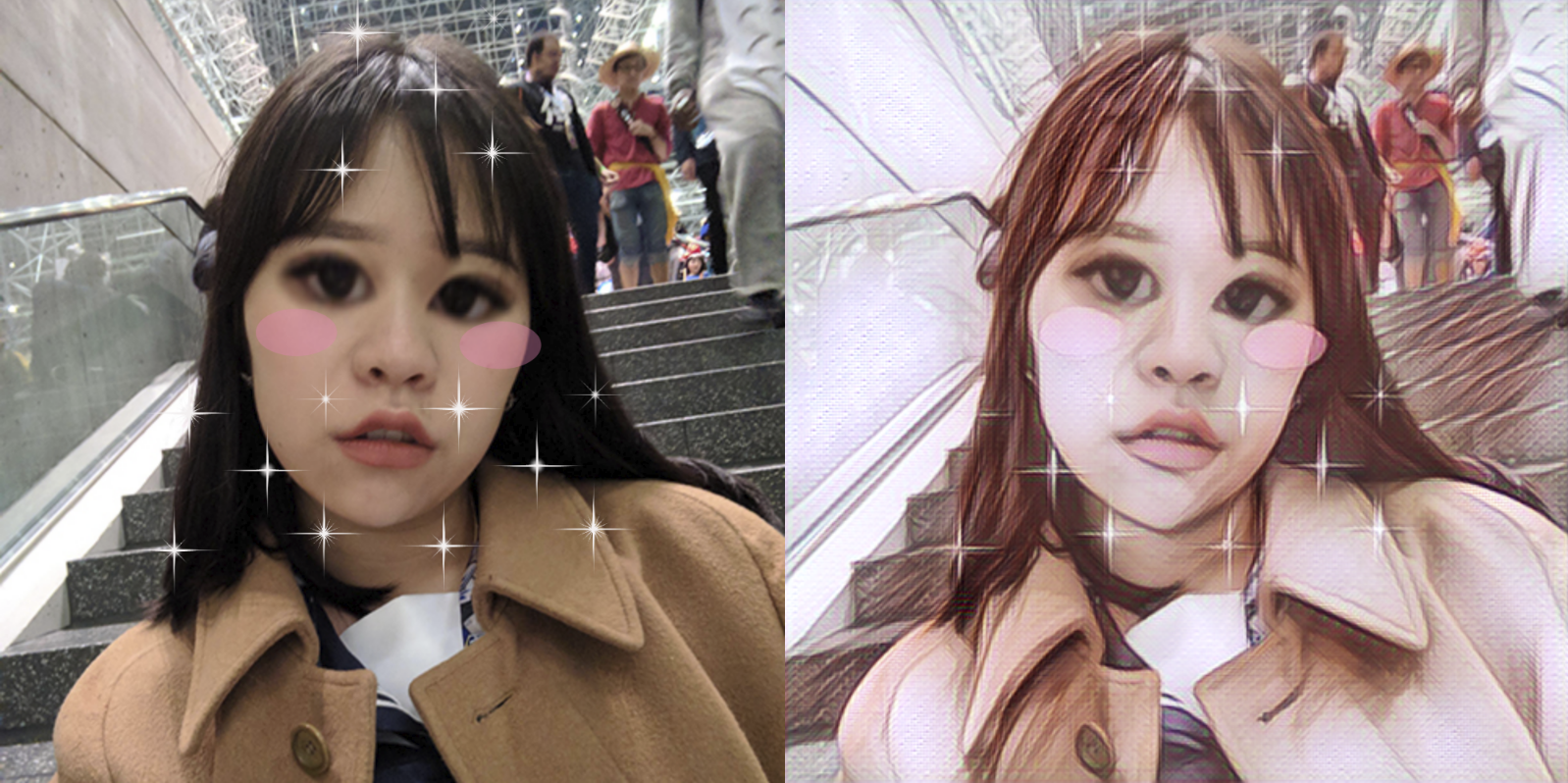

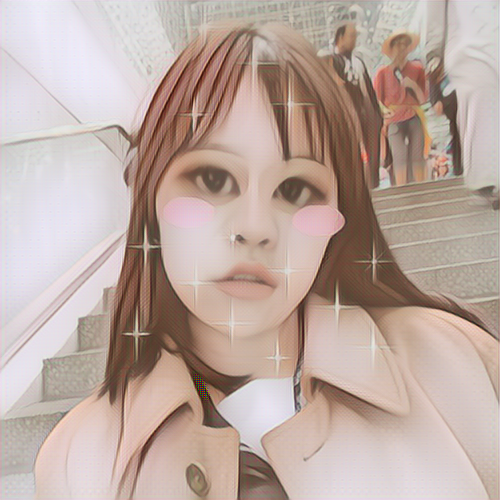

As you can see, style transfer doesn’t change the size and shape of the face. So even if the drawings have exaggerated eyes, the output of the style transfer model won’t. Yining, my teacher, suggested I use poseNet to enlarge the eyes and add other elements. I photoshopped the original photo with the effects I wanted in order to test how they would look after being style transferred.

PoseNet

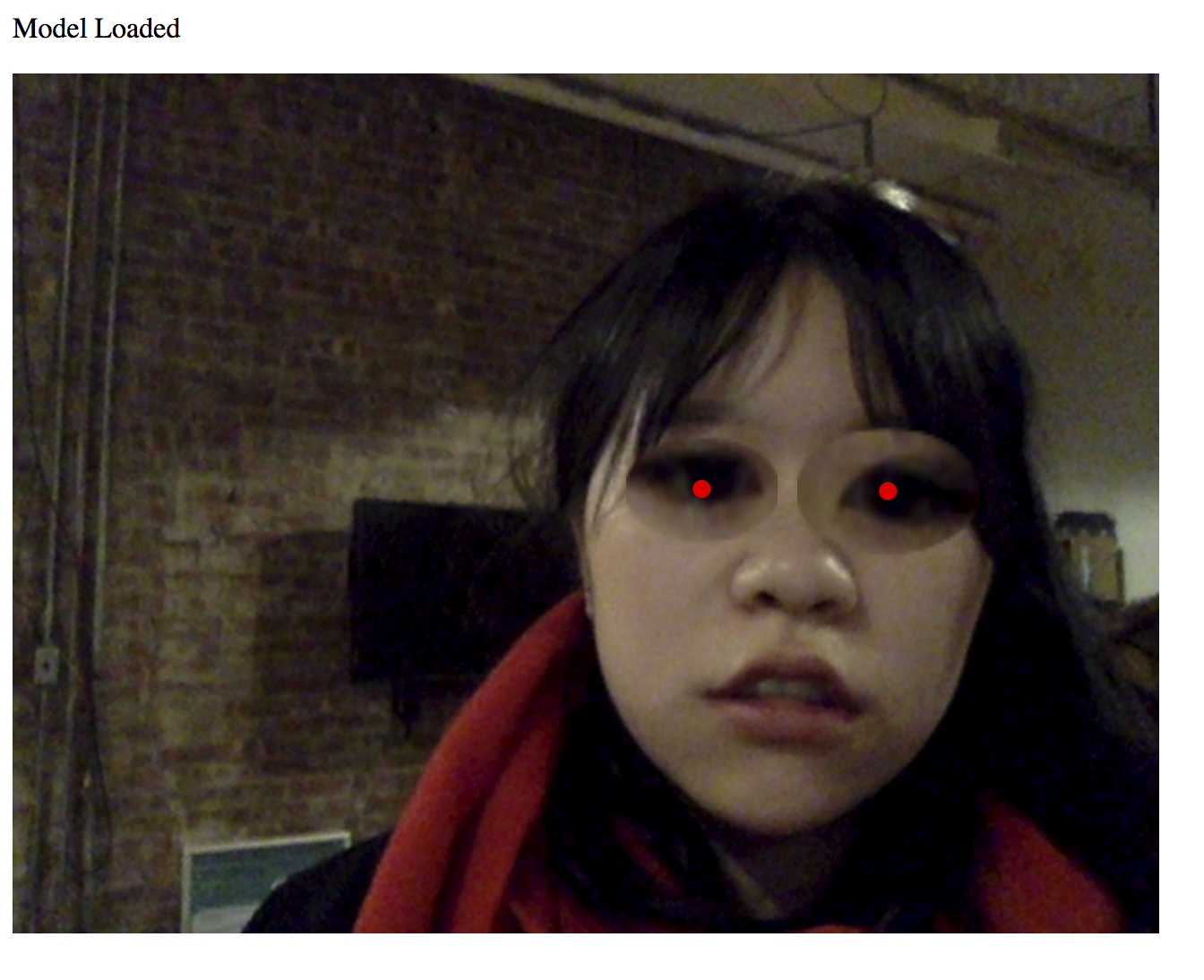

I used poseNet with ml5 to detect the eyes and nose of the person in the photo. I then used those points to relatively enlarge the eyes and place blush and sparkles. I started using poseNet on a webcam video stream to test and then moved to an image input.
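As a rough sketch of the eye-enlargement step, the helper below derives a source square around an eye keypoint and a larger destination square centered on the same point, sized relative to the distance between the eyes. The function names and the `eyeSizeRatio` and `scale` values are illustrative assumptions, not taken from my original sketch; the keypoint shape (`part`, `position`) follows PoseNet's standard output.

```javascript
// Look up a named keypoint ("nose", "leftEye", "rightEye") in a PoseNet pose.
function findPart(pose, part) {
  const kp = pose.keypoints.find((k) => k.part === part);
  return kp ? kp.position : null;
}

// Compute the region to copy (src) and where to draw it enlarged (dst).
// eyeSizeRatio and scale are hypothetical tuning values.
function eyeRects(pose, part, eyeSizeRatio = 0.5, scale = 1.5) {
  const left = findPart(pose, 'leftEye');
  const right = findPart(pose, 'rightEye');
  const eye = findPart(pose, part);
  if (!left || !right || !eye) return null;

  // Size everything relative to the distance between the eyes so the
  // effect scales with how large the face is in the photo.
  const eyeDist = Math.hypot(right.x - left.x, right.y - left.y);
  const srcSize = eyeDist * eyeSizeRatio;
  const dstSize = srcSize * scale;

  return {
    src: { x: eye.x - srcSize / 2, y: eye.y - srcSize / 2, w: srcSize, h: srcSize },
    dst: { x: eye.x - dstSize / 2, y: eye.y - dstSize / 2, w: dstSize, h: dstSize },
  };
}
```

In p5.js, the two rectangles could then be passed to image(img, dst.x, dst.y, dst.w, dst.h, src.x, src.y, src.w, src.h) to draw the enlarged eye on top of the original.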

The blush marks are ellipses drawn relative to the eyes and nose keypoints. The sparkles are just a transparent png image.
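One possible way to place the blush relative to the keypoints is sketched below. The specific offsets (`outward`, `widthRatio`, `heightRatio`) are assumptions chosen for illustration, not the values from my sketch: each ellipse sits at the height of the nose, pushed slightly away from it horizontally, and is sized from the distance between the eyes.

```javascript
// Compute one blush ellipse per eye from PoseNet keypoint positions.
// Each argument is an {x, y} position; the ratios are hypothetical.
function blushEllipses(leftEye, rightEye, nose,
                       outward = 0.25, widthRatio = 0.5, heightRatio = 0.25) {
  const eyeDist = Math.hypot(rightEye.x - leftEye.x, rightEye.y - leftEye.y);
  const w = eyeDist * widthRatio;
  const h = eyeDist * heightRatio;

  return [leftEye, rightEye].map((eye) => ({
    // Move the cheek center away from the nose, in the eye's direction.
    x: eye.x + (eye.x - nose.x) * outward,
    // Put the blush at nose height, i.e. below the eye.
    y: nose.y,
    w,
    h,
  }));
}
```

Each returned object can be drawn in p5.js with ellipse(b.x, b.y, b.w, b.h) after setting a semi-transparent pink fill.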

I then saved frames of the canvas and created an image of the first frame. I fed that image into the style transfer model to get the final result.
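The hand-off to the style transfer model can be sketched roughly as follows. It assumes `style` is an object returned by ml5.styleTransfer() and that transfer() takes an error-first callback, as documented for ml5 0.x releases; the version I used may differ, and the `stylize` wrapper with its callbacks is a hypothetical helper.

```javascript
// Run an image through a loaded ml5 style transfer model.
// style:     assumed to come from ml5.styleTransfer(modelPath, onLoaded)
// inputImg:  an image element created from the saved canvas frame
// onResult:  called with the stylized image
// onError:   optional, called if the transfer fails
function stylize(style, inputImg, onResult, onError) {
  style.transfer(inputImg, (err, result) => {
    if (err) {
      if (onError) onError(err);
      return;
    }
    onResult(result);
  });
}
```

In the browser, onResult could, for example, append the returned image to the page so it can be saved.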

The results are a bit scary, mainly because of the rough edges of the enlarged eyes. In the future, I would like to use p5’s image mask() function with a radial gradient circle image, instead of just drawing an ellipse, to blend the layers better.
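To illustrate the radial gradient idea, here is a minimal sketch that generates the mask pixels in code instead of loading a png. It is an assumption about how such a mask could be built, not what I actually implemented: alpha is fully opaque inside an inner radius and fades linearly to transparent at the edge. The names `radialAlpha`, `makeRadialMask`, and `innerRatio` are hypothetical.

```javascript
// Alpha (0-255) for a pixel at distance `dist` from the mask center:
// opaque inside innerRadius, linear fade to transparent at outerRadius.
function radialAlpha(dist, innerRadius, outerRadius) {
  if (dist <= innerRadius) return 255;
  if (dist >= outerRadius) return 0;
  const t = (dist - innerRadius) / (outerRadius - innerRadius);
  return Math.round(255 * (1 - t));
}

// Build RGBA pixel data for a size x size white circle with soft edges.
function makeRadialMask(size, innerRatio = 0.5) {
  const pixels = new Uint8ClampedArray(size * size * 4);
  const center = (size - 1) / 2;
  const outer = size / 2;
  const inner = outer * innerRatio;

  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      const i = 4 * (y * size + x);
      pixels[i] = pixels[i + 1] = pixels[i + 2] = 255; // white
      pixels[i + 3] = radialAlpha(Math.hypot(x - center, y - center), inner, outer);
    }
  }
  return pixels;
}
```

The pixel data could be copied into a p5.Image (via loadPixels() and updatePixels()) and passed to the mask() method of the enlarged-eye image, so the eye fades into the face instead of ending at a hard edge.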

Testing

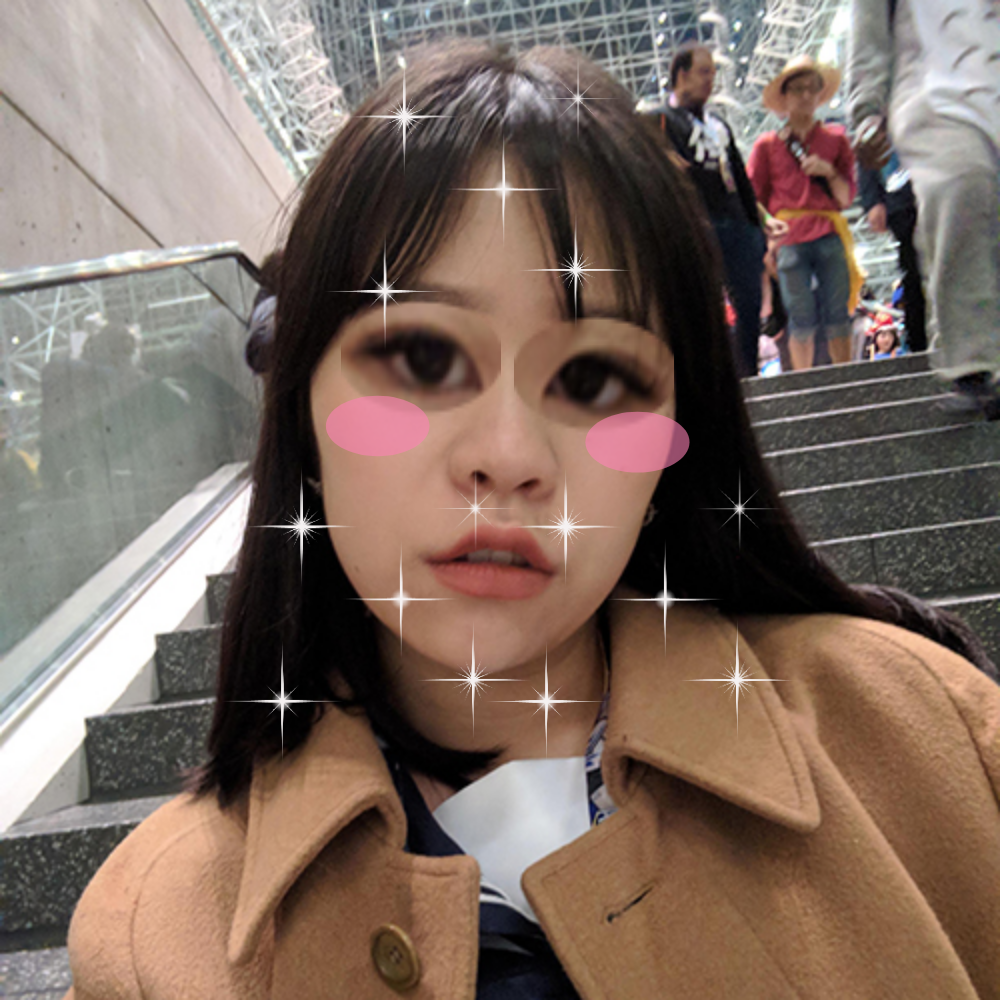

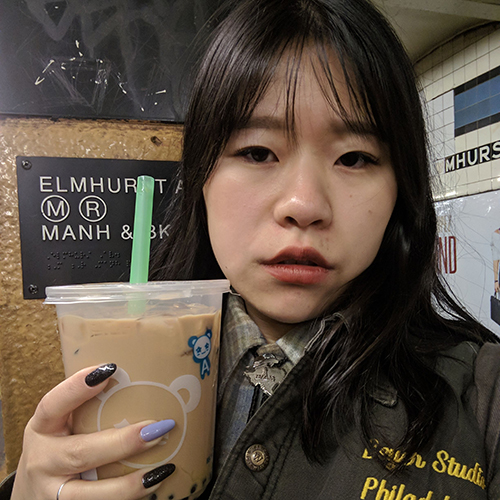

I tested with another image of myself to see if the results would be comparable. The original image must be 500 by 500 pixels right now.
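One way to lift the fixed-size restriction would be to center-crop any photo to a square and scale it to 500 by 500 before processing. The helper below is only a sketch of that idea and is not part of the current filter; `squareCrop` and its return fields are hypothetical names.

```javascript
// Compute a centered square crop of a width x height image and the
// scale factor needed to bring that square to target x target pixels.
function squareCrop(width, height, target = 500) {
  const size = Math.min(width, height);
  return {
    sx: (width - size) / 2,   // left edge of the crop
    sy: (height - size) / 2,  // top edge of the crop
    size,                     // side length of the crop in source pixels
    scale: target / size,     // multiply source pixels by this to reach target
  };
}
```

For a 1200 by 800 photo this selects the middle 800 by 800 region, which would then be drawn onto a 500 by 500 canvas.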

It works the same as the first image I tried but it’s still scary.

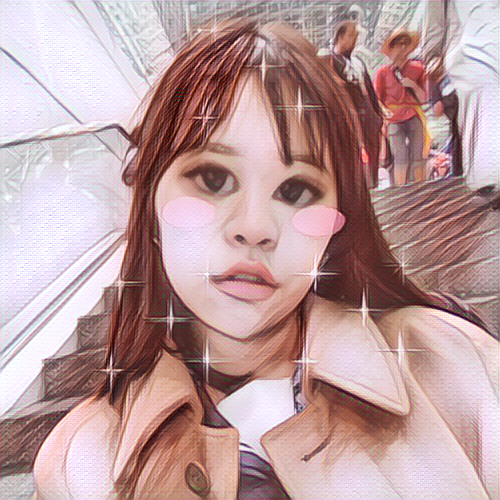

Edit 12/18/18: Improvements

I worked on other aspects of this filter for another class and was able to improve the blending around the eyes with a png mask file.

My final results as of now with different style transfer models:

It works with more than 1 pose too if you change the poseNet function call from poseNet.singlePose(img); to poseNet.multiPose(img);.
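The switch between the two calls can be wrapped in a small helper, sketched below. It assumes `poseNet` is an ml5 poseNet instance created for image input and that results arrive through its 'pose' event, as in the ml5 single-image example; `runPoseNet` and the `multiple` flag are hypothetical names.

```javascript
// Run PoseNet on a still image in single- or multi-pose mode.
// onPoses receives the array of detected poses from the 'pose' event.
function runPoseNet(poseNet, img, multiple, onPoses) {
  poseNet.on('pose', onPoses);
  if (multiple) {
    poseNet.multiPose(img);
  } else {
    poseNet.singlePose(img);
  }
}
```

Because both modes deliver an array of poses, the drawing code can loop over the results and apply the eye, blush, and sparkle effects to each detected face.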

To Do

Originally, I also wanted to use body-pix to detect the silhouette of the figure and remove the background but I ran out of time. This is so I can have the filtered person in the original photo-realistic environment or just replace the background with something else completely.

Photoshopped idea of what I was going for:

Most importantly, I want people to be able to upload a photo and then download the result. The user interface still needs to be built. I would also like them to be able to choose between single pose or multiple poses and between different style transfer models.

One issue I thought of is that this model probably won’t work well for people with darker skin tones. The Meitu app had a similar issue. Maybe I will need to use a different type of style transfer or experiment with parameters so that the colors of the style are not applied as strongly.

Links

PoseNet single pose image example

images used for this test are by